The conclusions reached by a study published in a recent issue of PNAS may not be entirely trustworthy. Well, maybe I need to be more specific so as to narrow it down to just one study. The paper I’m referring to is “Heavy use of equations impedes communication among biologists”, which appeared in PNAS on July 17th. In this study, authors Tim Fawcett and Andrew Higginson considered papers published in 1998 in a few select biology journals and counted (a) the number of citations each paper received, (b) the number of equations in each paper, and (c) each paper’s length in pages. Seriously, pages. Anyway, after collecting and analyzing this data, the researchers found a negative correlation between the number of citations a paper received and the number of equations per page it contained: for every additional equation per page, the number of citations decreased by 22%.
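To see what that 22% figure implies, assume (my assumption, not something spelled out above) that the drop compounds multiplicatively, as it would in a log-linear count model. Then a paper with $n$ equations per page would expect

$$C(n) = C_0 \times (1 - 0.22)^n = C_0 \times 0.78^n,$$

where $C_0$ is a hypothetical baseline citation count for a paper with no equations. Two equations per page would leave $0.78^2 \approx 0.61$ of the baseline, so roughly 39% fewer citations, not 44%.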

Fawcett and Higginson state that their results suggest “a failure of communication that may hinder scientific progress”, as in biology, “empirical studies build largely on other empirical studies with little direct reference to relevant theory”. Or to put it another way, biologists are scared of math. This should come as no surprise to anyone with a passing familiarity with biologists. After all, biology is essentially just applied creative writing. The critical question in every biology paper is more or less “How do we imagine cells might work?” So it’s perfectly understandable that biologists would avoid certain papers at the mere sight of centered text and large parentheses.

Fawcett and Higginson looked further at their results and made two other observations. First, the decrease in citations for papers with more equations…per page was due to a lack of citations from later non-theoretical papers (a decrease of 27% per additional equation per page), while there was no correlation between citations from later theoretical papers and the equation density of the cited papers. So non-theoretical papers cite other non-theoretical papers while theoretical papers cite other theoretical papers, a finding which obeys the Ursine Forest Defecation Conjecture. The second key observation was that the negative correlation between citation count and equation density applied only to equations in the main text; no correlation existed for equations placed in an appendix. This result is especially notable, as it proves that nobody ever reads the appendix of a scientific paper. An appendix could just be the lyrics to the 1995 rock hit “Breakfast at Tiffany’s” and the paper would still be accepted to Nature if it had a power law graph somewhere.

Despite Fawson and Higgincett’s groundbreaking work and clear results, there are some in the scientific community who have issues with their findings. The November 6th issue of PNAS contained four separate letters criticizing “Heavy use of equations”, with one letter from Andrew Fernandes stating that the paper “mistakes correlation for causation”. Going beyond the paper’s conclusions (and all the usual stuff about PNAS and prearranged editors and all that dull minutiae, blah, blah, blah), one could ask questions about its methodology as well. Why did the authors look only at journals in ecology and evolutionary biology, instead of all of biology? Why did they study papers from only three journals that seem to have been chosen arbitrarily? The authors claim they picked journals with higher proportions of theoretical papers, but they use 2011 journal statistics to illustrate this, rather than statistics from the year they actually drew the papers from. On that note, why did the authors look only at papers published in 1998 rather than any other year or time span? How do we know they didn’t look at papers from other fields/journals/years before finally getting the results they wanted with this set?

And I can’t get over the equations per page thing. There are so many questions. I mean, I know why they chose to use the density of equations rather than the raw number of equations (because there was no correlation with the raw number), but how did they handle the page count part? Did they use fractional page counts, or did they round up to the next whole page? What if a paper started halfway down the page in the journal? If they didn’t use the original journals, did they print out the pages themselves or did they just use the Microsoft Word information box? What size font did they use? How big were the margins? Letter or legal? Times or Arial? I need to know these things!

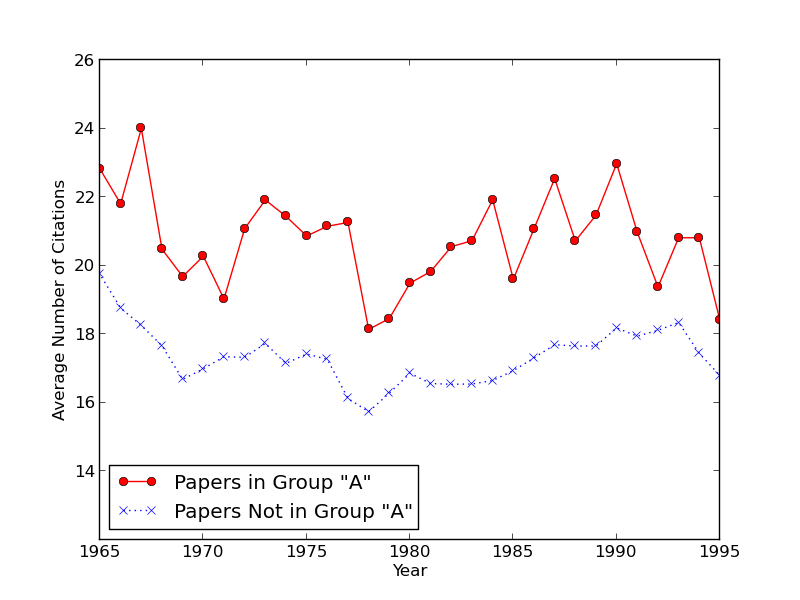

Regardless of any perceived and slight failings in analysis and conclusions, “Heavy use of equations” is an obviously brilliant scientific work. Moreover, it has inspired me to do my own cursory and selective analysis on the citation database. And thanks to the speed at which one can draw these frivolous correlations, I’ve already extracted my first result, which you can see in this figure:

The red line depicts the average number of citations for papers that all share a common characteristic, which I call Group “A”. The blue line depicts the average number of citations for papers that do not have this characteristic and thus are not in Group “A”. Any guesses as to what characteristic all papers in Group “A” have in common? I’ll give you some time to think about it.

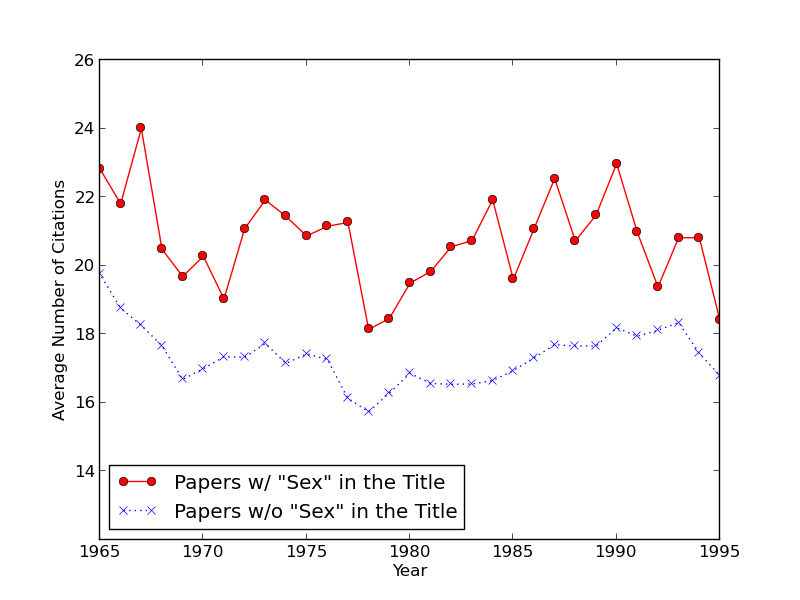

Time’s up. Here’s the official figure:

Group “A” is the set of papers with titles that contain the word “SEX”, either by itself or as part of a longer word like “SEXUALITY”. From 1965 to 1995, there were 62,167 papers with “SEX” in the title (accounting for 0.47% of all papers in that time span), from 489 papers in 1965 (0.33% of all papers in that year) to 3,316 in 1995 (0.48%). As you can see from the figure, for each year from 1965 to 1995, the papers that have “SEX” in the title are cited more on average than papers that do not. Papers that contain “SEX” in the title have on average 3.4 more citations than papers that do not.

Sure, I’ll admit there are some issues with my analysis, like how a simple search for “SEX” in scientific paper titles would incorrectly put papers with unsexy titles like “Soil erosion of riverbanks in Essex, England” into Group “A”. (Of course, Fawcett and Higginson’s method of designating papers as theoretical or non-theoretical by searching abstracts for keywords was accurate only 85% of the time, so I have confidence in the spurious accuracy of my method.) Aside from that, I am happy to ignore my selective choice of time span (“SEX” papers performed worse in 1964, and the smaller average citation counts for both groups from 1996 on would mean rescaling the plot’s y-axis, which would look weird) and the lack of statistical testing (couldn’t be bothered).
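For the curious, here is a minimal Python sketch of the kind of title search described above, assuming titles are plain strings. It is not the code behind the figure; the function names and the second title are hypothetical. It also shows a slightly stricter version that only matches “SEX” at the start of a word, which spares Essex while still catching “SEXUALITY”.

```python
import re

# Hypothetical helpers illustrating the Group "A" rule from the text:
# a title qualifies if it contains "SEX" by itself or inside a longer word.

def naive_group_a(title: str) -> bool:
    """Plain case-insensitive substring search (flags "Essex" too)."""
    return "sex" in title.lower()

# Matches "sex" only where it starts a word, so "Essex" is excluded
# while "Sexuality" is still included.
WORD_START_SEX = re.compile(r"\bsex", re.IGNORECASE)

def stricter_group_a(title: str) -> bool:
    """Case-insensitive search for "sex" at the start of a word."""
    return WORD_START_SEX.search(title) is not None

if __name__ == "__main__":
    titles = [
        "Soil erosion of riverbanks in Essex, England",  # title from the text
        "Sexuality in a hypothetical beetle",  # hypothetical title
    ]
    for t in titles:
        print(naive_group_a(t), stricter_group_a(t), t)
```

The stricter version trades one error for another: it keeps “SEXUALITY” but would now miss words like “ASEXUAL” or “BISEXUAL”, which the plain search catches.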

I feel that my analysis should shake up the scientific field, as I fully expect more researchers to try to work “sex” into article titles so as to gain added attention and more citations. This need not be limited to just biologists, as even chemists, physicists, and mathematicians can find a way to put “sex” in their papers with enough creative thinking. For example:

Clever! And that’s an equation that even biologists can get behind.

—Max Wasserman